You can retrieve Performance Insights data through an API, for example with the AWS Command Line Interface (AWS CLI).
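As a minimal sketch of such a request, assuming Python with the boto3 SDK rather than the AWS CLI, the following builds the parameters for a Performance Insights `GetResourceMetrics` call that returns `db.load.avg` grouped by wait event. The resource identifier `db-EXAMPLE`, the Region, and the helper names are hypothetical placeholders, not values from this post.

```python
from datetime import datetime, timedelta, timezone


def build_db_load_request(resource_id, minutes=60, period_seconds=60):
    """Build keyword arguments for a Performance Insights GetResourceMetrics call."""
    end = datetime.now(timezone.utc)
    start = end - timedelta(minutes=minutes)
    return {
        "ServiceType": "RDS",
        "Identifier": resource_id,  # the instance's DbiResourceId (placeholder here)
        "StartTime": start,
        "EndTime": end,
        "PeriodInSeconds": period_seconds,
        "MetricQueries": [
            # Average active sessions, split by PostgreSQL wait event
            {"Metric": "db.load.avg", "GroupBy": {"Group": "db.wait_event"}}
        ],
    }


def fetch_db_load(resource_id, region="us-east-1"):
    """Send the request; needs boto3 and AWS credentials (both assumed)."""
    import boto3  # imported lazily so the request builder works without the SDK

    client = boto3.client("pi", region_name=region)
    return client.get_resource_metrics(**build_db_load_request(resource_id))


if __name__ == "__main__":
    # Only prints the request shape; no AWS call is made here.
    print(build_db_load_request("db-EXAMPLE", minutes=30))
```

Keeping the request builder separate from the network call makes it easy to inspect or log the query before sending it.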


The load is simulated by a cyclic positive sine wave that triggers threads that insert new orders. The orders are stored in an Amazon Simple Queue Service (Amazon SQS) queue for processing by another type of thread that updates the orders. The load consumes 20% of the database CPU and processes up to 5,000 transactions per second (denoted by xact_commit in the diagram).

Monitor and react to buffer_content locks to avoid application interruption

We found that the Amazon CloudWatch metric DBLoadNonCPU helps predict an incoming episode of buffer_content locks because the lock is an active non-CPU event. When this occurs, the process waits on a lock while in an active state and does not require CPU, which is similar to the behavior in the buffer_content LWLock case. The central metric for Performance Insights is db.load, which is collected every second. This metric represents the average active sessions for the DB engine. The db.load metric is a time series grouped by the PostgreSQL wait events (dimensions).
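To illustrate how a rising DBLoadNonCPU value could be flagged early, here is a minimal sketch of a rolling-band check over a list of metric samples. It is an assumption for illustration only: the window size, the band width, and the function name are hypothetical, and this is not the algorithm CloudWatch anomaly detection uses.

```python
from statistics import mean, pstdev


def find_anomalies(samples, window=5, num_stddev=2.0):
    """Return indexes of samples above mean + num_stddev * stddev of the prior window."""
    anomalies = []
    for i in range(window, len(samples)):
        history = samples[i - window:i]
        # Upper band derived only from the preceding samples
        upper = mean(history) + num_stddev * pstdev(history)
        if samples[i] > upper:
            anomalies.append(i)
    return anomalies


if __name__ == "__main__":
    # Hypothetical DBLoadNonCPU readings (average active non-CPU sessions)
    readings = [2.0, 2.1, 1.9, 2.0, 2.1, 2.0, 9.5, 2.0]
    print(find_anomalies(readings))
```

In practice you would feed this kind of check with samples retrieved from CloudWatch and tune the window and band to the workload's normal cycle.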


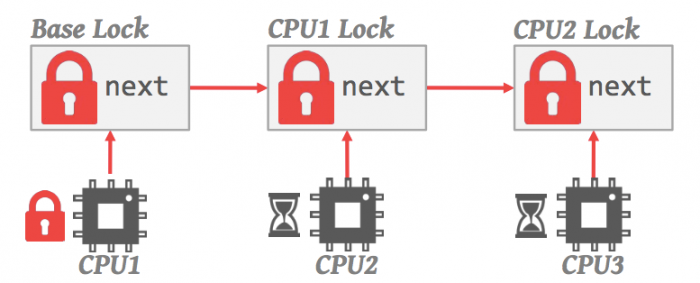

We ran a benchmark with a load simulator that generated thousands of transactions per second and 20 TB of data to demonstrate an application workload and data that cause the contention. The load simulator mimics a fictitious application ordering system that allows users to place new orders (steps 1 and 2 in the diagram) and update the orders throughout the order lifecycle (steps 3 and 4 in the diagram). We observed accumulations of buffer_content LWLocks during peak load, where many sessions acquired an exclusive lock instead of a shared lock on a page during insert and update. This reduced the database's insert and update throughput and even caused the database to become unresponsive, resulting in application service interruption.

The issue starts when many processes acquire an exclusive lock on buffer content. While holding an exclusive lock, a process prevents other processes from acquiring a shared or exclusive lock. A shared lock, by contrast, can be acquired concurrently by other processes. As a result, developers can see LWLock:buffer_content become a top wait event in Performance Insights and pg_stat_activity.
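One way to check whether LWLock:buffer_content is a top wait event is to count active sessions per wait event in pg_stat_activity. The sketch below is illustrative: the query uses standard pg_stat_activity columns, while the helper name and the sample rows are hypothetical. Running the query requires a PostgreSQL driver of your choice, which is assumed and not shown.

```python
from collections import Counter

# Query to run against the database with any PostgreSQL driver (assumed, not shown).
ACTIVE_WAITS_SQL = """
SELECT wait_event_type, wait_event
FROM pg_stat_activity
WHERE state = 'active' AND wait_event IS NOT NULL
"""


def top_wait_events(rows, limit=3):
    """Count (wait_event_type, wait_event) rows and return the most common ones."""
    counts = Counter(f"{event_type}:{event}" for event_type, event in rows)
    return counts.most_common(limit)


if __name__ == "__main__":
    # Hypothetical rows, shaped like the result of ACTIVE_WAITS_SQL
    sample_rows = (
        [("LWLock", "buffer_content")] * 3
        + [("IO", "DataFileRead")]
        + [("Lock", "transactionid")] * 2
    )
    for name, sessions in top_wait_events(sample_rows, limit=2):
        print(name, sessions)
```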

PostgreSQL uses lightweight locks (LWLocks) to synchronize and control access to the buffer content. A process acquires an LWLock in shared mode to read from the buffer and in exclusive mode to write to the buffer.
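The shared and exclusive modes can be pictured with a toy model. This sketch only illustrates the compatibility rules between the two modes; it is not PostgreSQL's implementation, and the class and method names are hypothetical. A real LWLock makes a conflicting process wait, whereas this model simply reports whether the request would be granted.

```python
class ToyLWLock:
    """Toy model of shared/exclusive lock modes on a single buffer."""

    def __init__(self):
        self.shared_holders = 0
        self.exclusive_held = False

    def try_acquire(self, mode):
        """Return True if the lock is granted in the requested mode, else False."""
        if mode == "shared":
            # Readers can share the lock unless a writer holds it
            if self.exclusive_held:
                return False
            self.shared_holders += 1
            return True
        if mode == "exclusive":
            # A writer needs the buffer entirely to itself
            if self.exclusive_held or self.shared_holders:
                return False
            self.exclusive_held = True
            return True
        raise ValueError(f"unknown mode: {mode}")

    def release(self, mode):
        """Release a previously granted lock in the given mode."""
        if mode == "shared" and self.shared_holders > 0:
            self.shared_holders -= 1
        elif mode == "exclusive" and self.exclusive_held:
            self.exclusive_held = False
        else:
            raise ValueError(f"lock not held in mode: {mode}")


if __name__ == "__main__":
    lock = ToyLWLock()
    print(lock.try_acquire("shared"))     # first reader
    print(lock.try_acquire("shared"))     # second reader
    print(lock.try_acquire("exclusive"))  # writer while readers hold the lock
```

When many sessions request exclusive mode on the same page, each one blocks all others, which is the contention pattern this post describes.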

Amazon Aurora offers metrics that enable application developers to react to the locks before they impact the application, and you can adopt various relational database architectural patterns to avoid application interruption caused by the locks.

What is the PostgreSQL buffer_content lightweight lock?

PostgreSQL copies data from disk into shared memory buffers when reading or writing data. The management of the buffers in PostgreSQL consists of a buffer descriptor that contains metadata about the buffer and the buffer content that is read from the disk.

We have seen customers overcoming rapid data growth challenges during 2020–2021. For customers working with PostgreSQL, a common bottleneck has been buffer_content locks caused by data contention under high concurrency or with large datasets. If you have experienced data contention that resulted in buffer_content locks, you may have also faced a business-impacting reduction of the primary DB throughput (inserts and updates). We found that adopting architectural database best practices can help decrease and even avoid buffer_content lock contention.

In this post, we provide a benchmark that includes a real-world workload with characteristics that lead to buffer_content lock contention. We then propose patterns to minimize the chance of buffer_content lock contention. We also share tips and best practices to minimize the application hot data, whether the database is self-managed or hosted in Amazon Relational Database Service (Amazon RDS) for PostgreSQL or Amazon Aurora PostgreSQL-Compatible Edition. We focus on Aurora because the issue manifests under hyper-scaled load in a highly concurrent server environment with 96 vCPUs and 768 GiB of memory, with terabytes of online transaction processing (OLTP) data. Amazon Aurora is a relational database service that combines the most popular open-source relational database systems with database reliability. In addition, we provide configuration samples for discovering potential locks using anomaly detection techniques.
